Example Variables (Open Data):

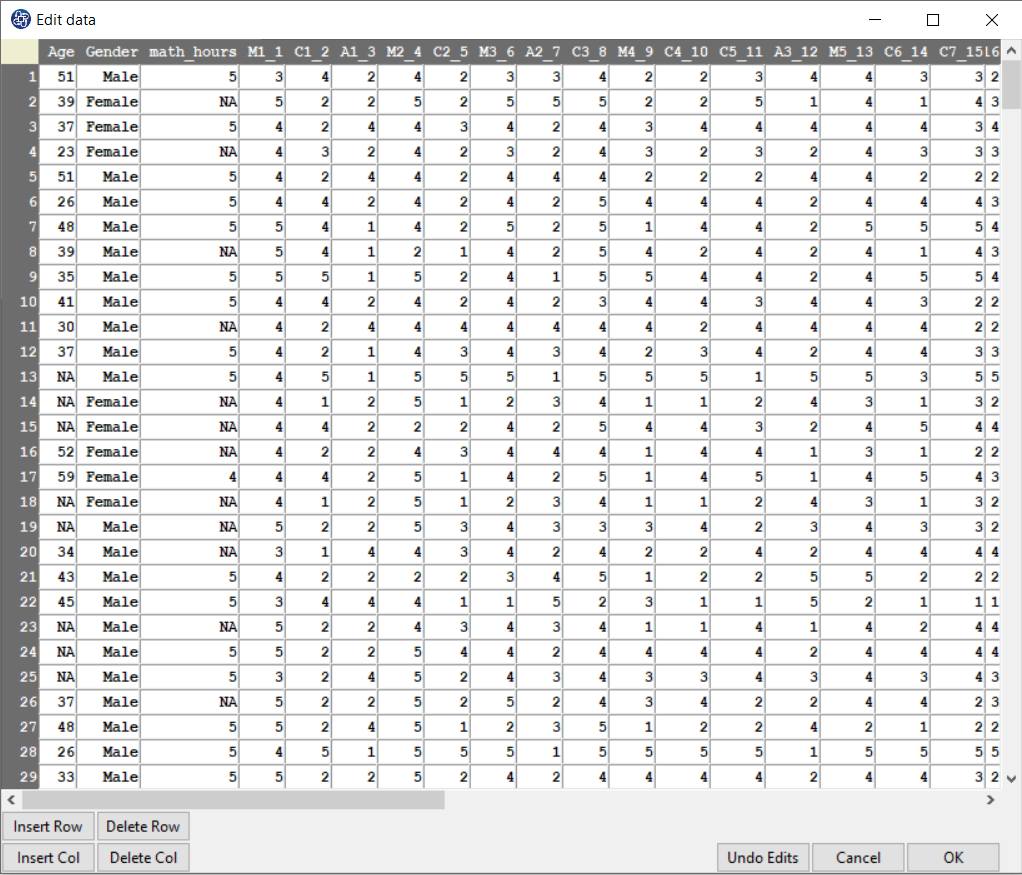

The Fennema-Sherman Mathematics Attitude Scales (FSMAS) are among the most popular instruments used in studies of

attitudes toward mathematics. FSMAS contains 36 items. Also, scales of FSMAS have Confidence, Effectance Motivation,

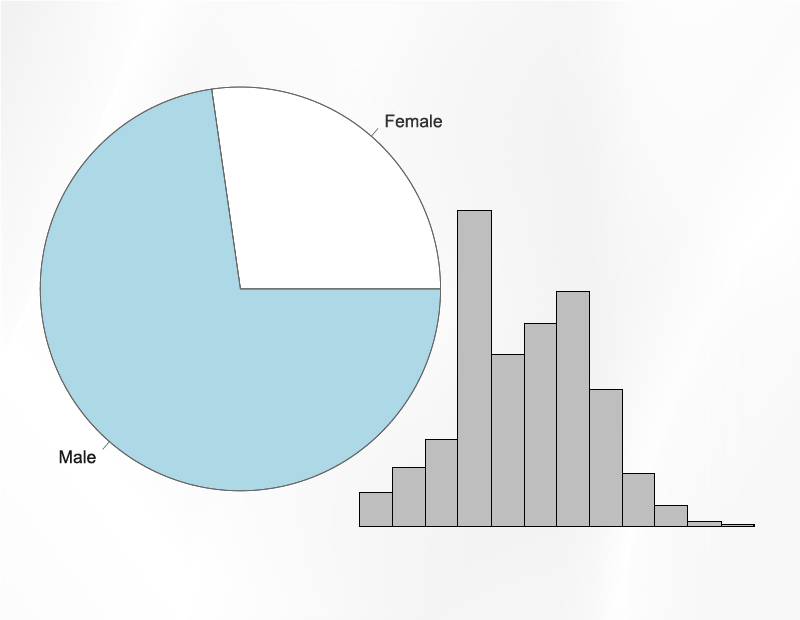

and Anxiety. The sample includes 425 teachers who answered all 36 items. In addition, other characteristics of teachers,

such as their age, are included in the data.

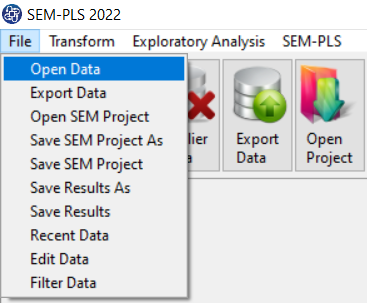

You can select your data as follows:

1-File

2-Open data

(See Open Data)

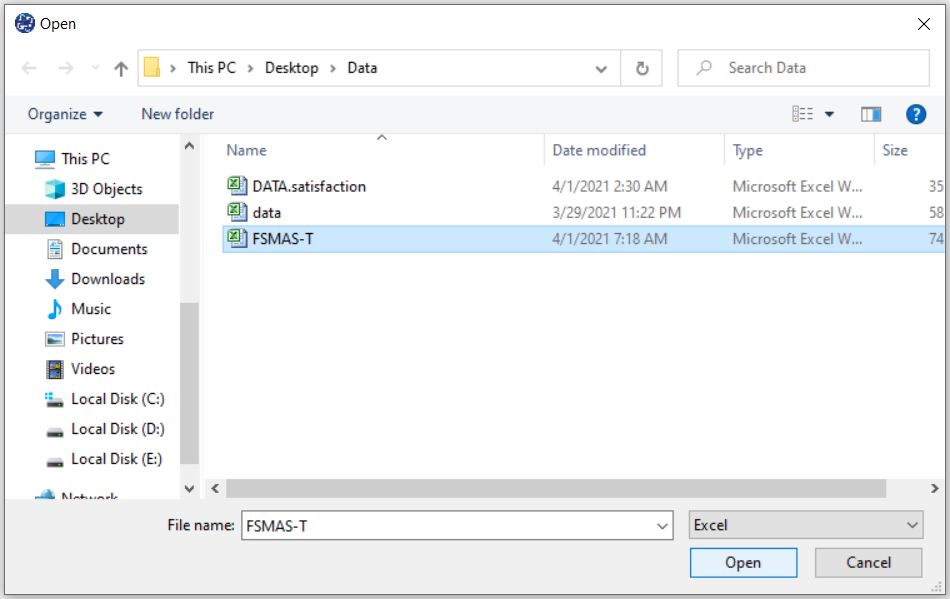

The data is stored under the name FSMAS-T (you can download this data from here).

You can edit the imported data via the following path:

1-File

2-Edit Data

(See Edit Data)

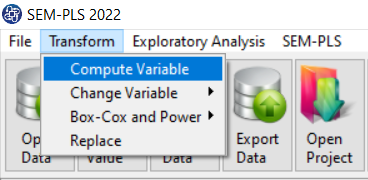

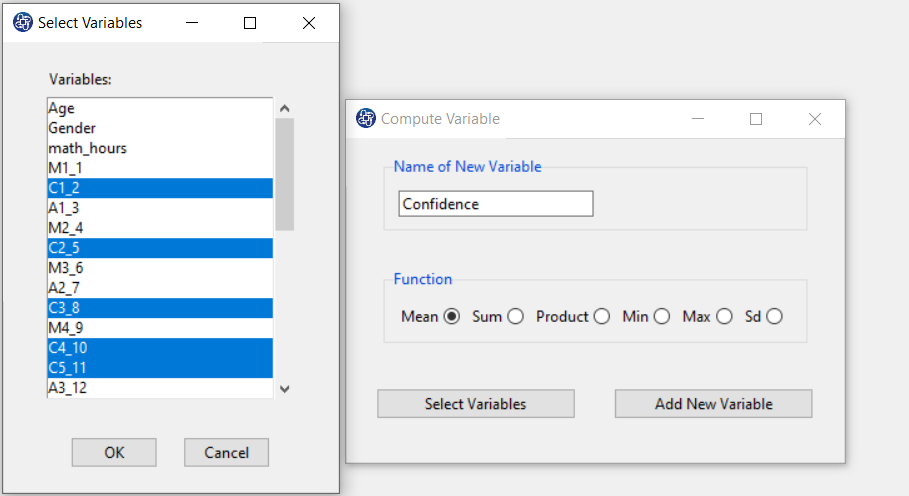

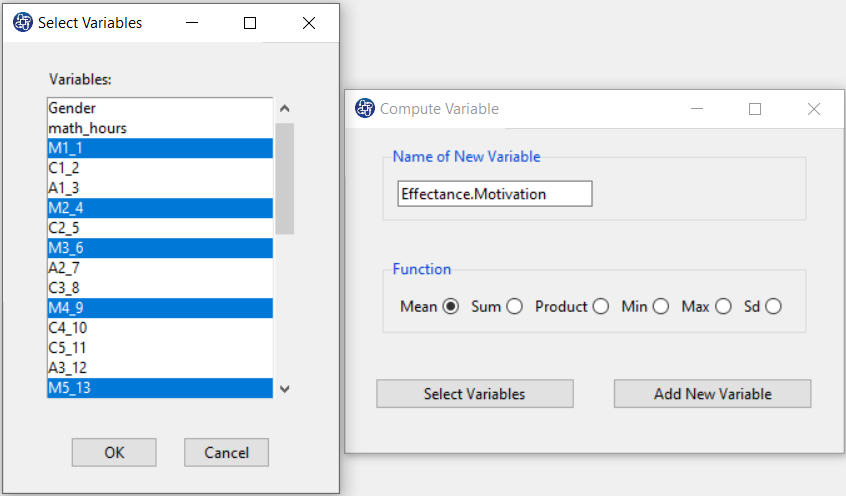

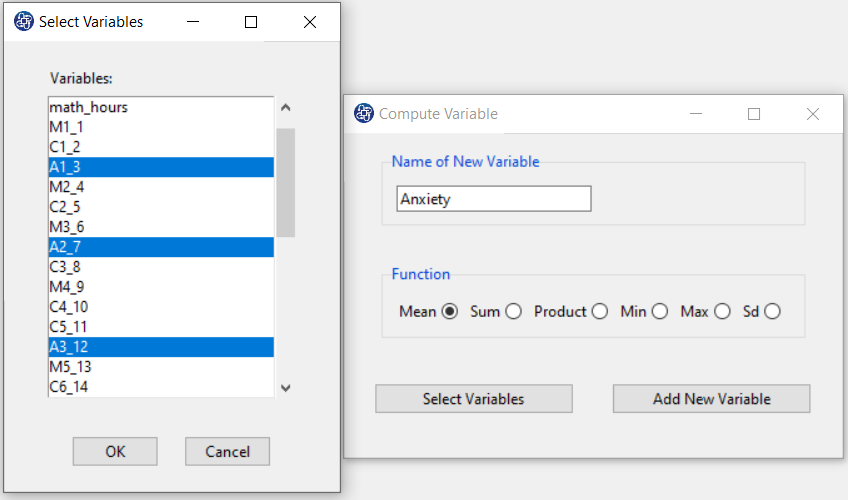

Example Variables (Compute Variable):

The three variables of Confidence, Effectance Motivation, and Anxiety can be calculated through the following path:

1-Transform

2-Compute Variable

Items starting with the letters C, M, and A are related to the variables Confidence, Effectance Motivation, and Anxiety, respectively.

(See Compute Variable)
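The Compute Variable step can be sketched in Python as below. This is a minimal sketch that assumes (1) items are named by their scale letter followed by a number (hypothetical names such as `C1`, `M2`, `A3`) and (2) each scale score is the sum of its items; the expression actually entered in Compute Variable (for example, a mean instead of a sum) may differ.

```python
def scale_scores(row):
    """Sum item responses per FSMAS scale, keyed by the item's first letter.

    Assumes hypothetical item names such as "C1", "M2", "A3"; any other
    column (e.g. "Age") is ignored.
    """
    prefixes = {"C": "Confidence", "M": "Effectance.Motivation", "A": "Anxiety"}
    scores = {name: 0 for name in prefixes.values()}
    for item, value in row.items():
        scale = prefixes.get(item[:1])
        # Count only names of the form <letter><digits>, so "Age" is skipped.
        if scale is not None and item[1:].isdigit():
            scores[scale] += value
    return scores


teacher = {"C1": 4, "C2": 5, "M1": 3, "M2": 4, "A1": 2, "A2": 1, "Age": 41}
print(scale_scores(teacher))
# {'Confidence': 9, 'Effectance.Motivation': 7, 'Anxiety': 3}
```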

Introduction to SUR (Seemingly Unrelated Regressions) Regression:

This method is a generalization of a linear regression model that consists of several regression equations. Each equation is a valid linear regression on its own and can be estimated separately, which is why the system is called seemingly unrelated, although some authors suggest that the term seemingly related would be more appropriate since the error terms are assumed to be correlated across the equations.

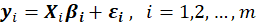

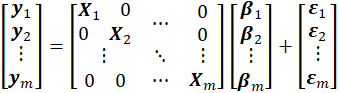

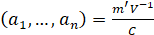

Consider a system of m equations, where the ith equation is of the form

Where

is a vector of the dependent variable,

is a vector of the dependent variable,

is the coefficient vector and ui is a vector of the disturbance terms of the ith equation.

is the coefficient vector and ui is a vector of the disturbance terms of the ith equation.

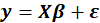

We can write

or more simply as

.

.
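The stacked form can be built directly with NumPy. In this sketch `stack_system` is a hypothetical helper (not part of the software), and each $X_i$ is assumed to already contain an intercept column if one is wanted.

```python
import numpy as np


def stack_system(ys, Xs):
    """Stack m equations y_i = X_i beta_i + u_i into y = X beta + u.

    ys: list of length-T response vectors; Xs: list of (T, K_i) matrices.
    Returns the stacked response (m*T,) and the block-diagonal design
    matrix (m*T, sum of K_i).
    """
    y = np.concatenate([np.asarray(v, dtype=float) for v in ys])
    T = len(ys[0])
    K = [Xi.shape[1] for Xi in Xs]
    X = np.zeros((T * len(Xs), sum(K)))
    col = 0
    for i, Xi in enumerate(Xs):
        # Equation i occupies rows i*T..(i+1)*T and its own block of columns.
        X[i * T:(i + 1) * T, col:col + K[i]] = Xi
        col += K[i]
    return y, X


X1 = np.array([[1.0, 2.0], [1.0, 3.0], [1.0, 5.0]])  # intercept + one regressor
X2 = np.array([[1.0], [1.0], [1.0]])                 # intercept only
y, X = stack_system([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], [X1, X2])
print(X.shape)  # (6, 3)
```

Each equation keeps its own block of columns, so the off-diagonal blocks of $X$ are zero.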

We assume that there is no correlation of the disturbance terms across observations.

We explicitly allow for contemporaneous correlation

, where

, where

and

and

indicate the equation number. However, we explicitly allow for contemporaneous correlation.

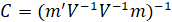

The covariance matrix of all disturbances is

indicate the equation number. However, we explicitly allow for contemporaneous correlation.

The covariance matrix of all disturbances is

Where

Where

is the (contemporaneous) disturbance covariance matrix,

is the (contemporaneous) disturbance covariance matrix,

is the Kronecker product,

is the Kronecker product,

is an identity matrix of dimension

is an identity matrix of dimension

, and

, and

is the number of observations in each equation.

is the number of observations in each equation.

The equations can be consistently estimated by seemingly unrelated regression (SUR).

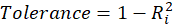

This estimation can be obtained by

$$\hat\beta = (X^\top \Omega^{-1} X)^{-1} X^\top \Omega^{-1} y.$$

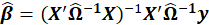

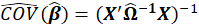

The covariance matrix of these estimators can be estimated by

$$\widehat{\operatorname{Cov}}[\hat\beta] = (X^\top \Omega^{-1} X)^{-1}.$$

Since the (true) disturbances (

)of the estimated equations are generally not known, their covariance matrix cannot be determined.

Therefore, this covariance matrix is generally calculated from estimated residuals

(

)of the estimated equations are generally not known, their covariance matrix cannot be determined.

Therefore, this covariance matrix is generally calculated from estimated residuals

(

)

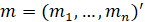

that are obtained from a first-step OLS estimation. The residual covariance matrix is calculated

)

that are obtained from a first-step OLS estimation. The residual covariance matrix is calculated

Where

and

and

are the number of independent variables in equation

are the number of independent variables in equation

and

and

, respectively.

, respectively.
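A minimal two-step SUR (feasible GLS) sketch in NumPy, assuming the same number of observations $T$ in every equation, a first-step OLS fit per equation, and the residual covariance $\hat\sigma_{ij} = \hat u_i^\top \hat u_j / \sqrt{(T-K_i)(T-K_j)}$. It uses $\hat\Omega^{-1} = \hat\Sigma^{-1} \otimes I_T$ and then $\hat\beta = (X^\top \hat\Omega^{-1} X)^{-1} X^\top \hat\Omega^{-1} y$ with covariance $(X^\top \hat\Omega^{-1} X)^{-1}$. `sur_fit` is a hypothetical name, and the software's own implementation may differ in details.

```python
import numpy as np


def sur_fit(ys, Xs):
    """Two-step SUR: equation-wise OLS, then GLS with Omega = Sigma kron I_T.

    ys: list of m response vectors of length T; Xs: list of (T, K_i) design
    matrices. Returns (beta, cov_beta, sigma).
    """
    m, T = len(ys), len(ys[0])
    K = [Xi.shape[1] for Xi in Xs]
    # Step 1: OLS residuals of each equation, estimated separately.
    resid = []
    for yi, Xi in zip(ys, Xs):
        bi, *_ = np.linalg.lstsq(Xi, yi, rcond=None)
        resid.append(yi - Xi @ bi)
    # Residual covariance with the sqrt((T - K_i)(T - K_j)) correction.
    sigma = np.empty((m, m))
    for i in range(m):
        for j in range(m):
            sigma[i, j] = resid[i] @ resid[j] / np.sqrt((T - K[i]) * (T - K[j]))
    # Stack the system: y = X beta + u with block-diagonal X.
    y = np.concatenate(ys)
    X = np.zeros((m * T, sum(K)))
    col = 0
    for i, Xi in enumerate(Xs):
        X[i * T:(i + 1) * T, col:col + K[i]] = Xi
        col += K[i]
    # Step 2: GLS, using (Sigma kron I_T)^-1 = Sigma^-1 kron I_T.
    omega_inv = np.kron(np.linalg.inv(sigma), np.eye(T))
    xtox = X.T @ omega_inv @ X
    beta = np.linalg.solve(xtox, X.T @ omega_inv @ y)
    cov_beta = np.linalg.inv(xtox)
    return beta, cov_beta, sigma


# Usage with simulated data where both equations share the same regressors.
rng = np.random.default_rng(0)
T = 50
x = rng.normal(size=T)
X_common = np.column_stack([np.ones(T), x])
y1 = 1.0 + 2.0 * x + rng.normal(size=T)
y2 = -1.0 + 0.5 * x + rng.normal(size=T)
beta, cov_beta, sigma = sur_fit([y1, y2], [X_common, X_common])
```

When every equation has the same regressors, as in the usage lines, SUR reproduces equation-by-equation OLS, which makes a convenient sanity check. Building the full Kronecker product needs $(mT)^2$ entries, which is fine for two equations and 425 teachers but wasteful for large $T$.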

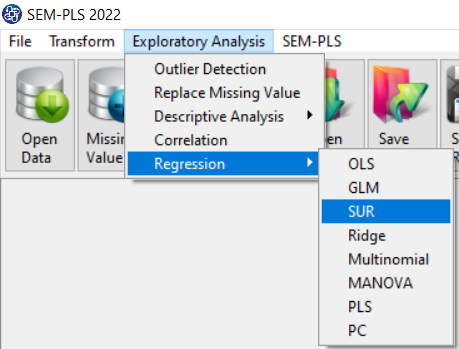

Path of SUR Regression:

You can perform SUR (Seemingly Unrelated Regressions) regression via the following path:

1-Exploratory Analysis

2-Regression

3-SUR

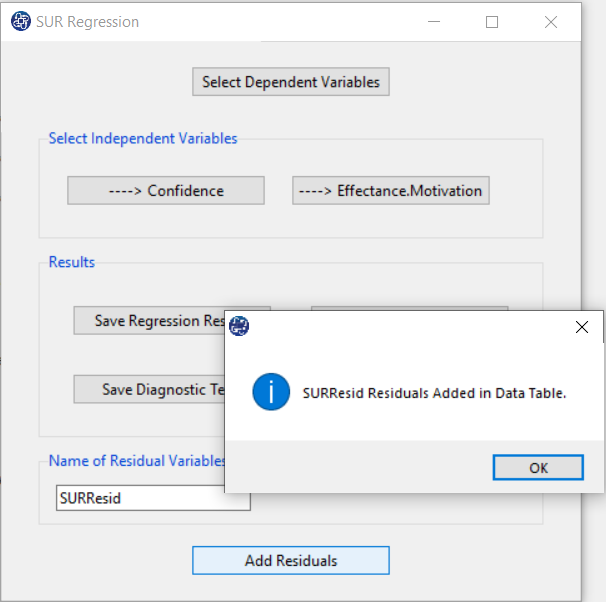

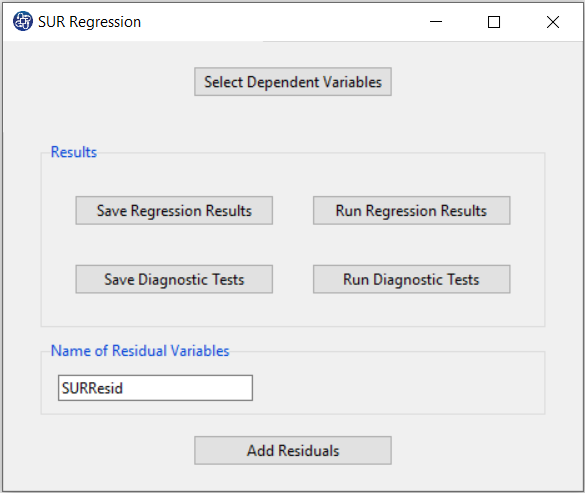

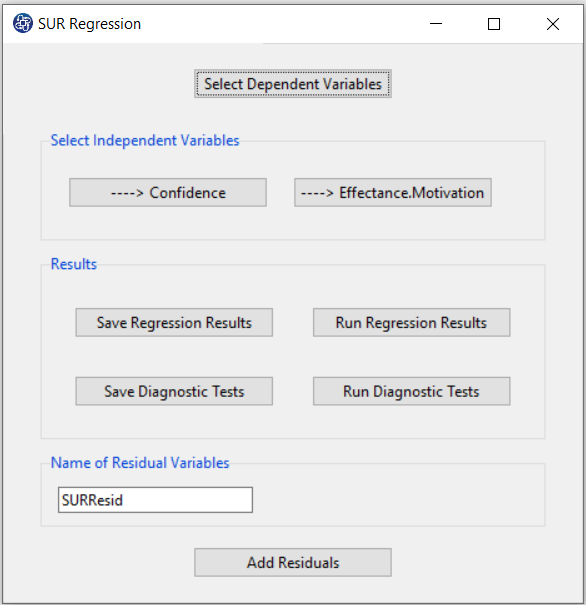

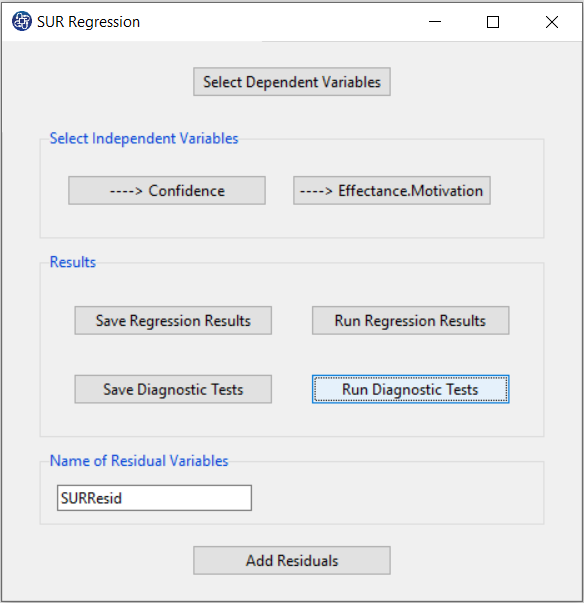

A. SUR Regression window:

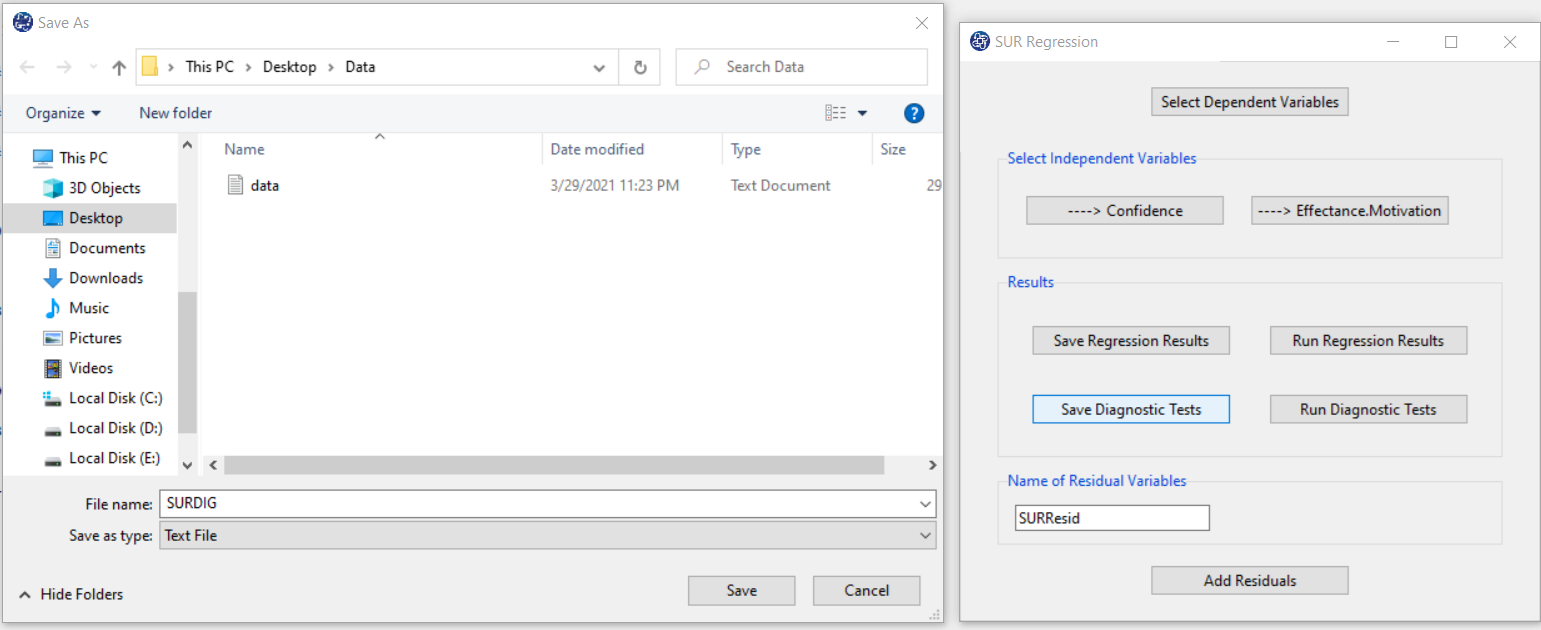

This window includes the Select Dependent Variables button, the results button, and the buttons for saving the residuals of the model. Defining models is done in two steps:

1-Define the dependent variables.

2-Select independent variables for each dependent variable defined in the first step.

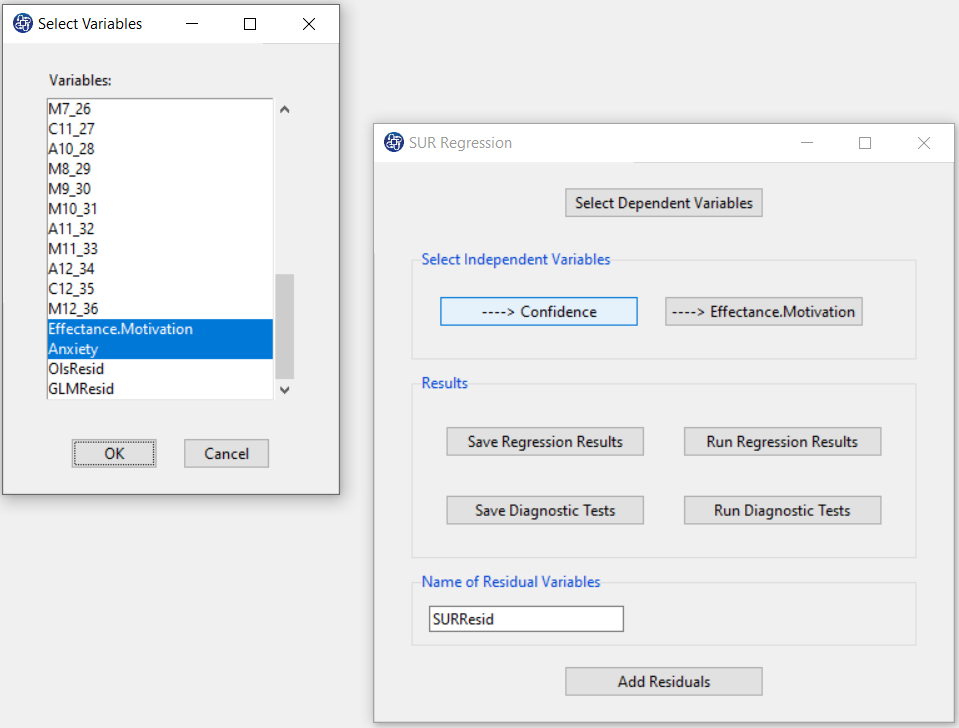

B. Select Dependent Variables:

You can select the dependent variables through this button. After the window opens, select the desired variables.

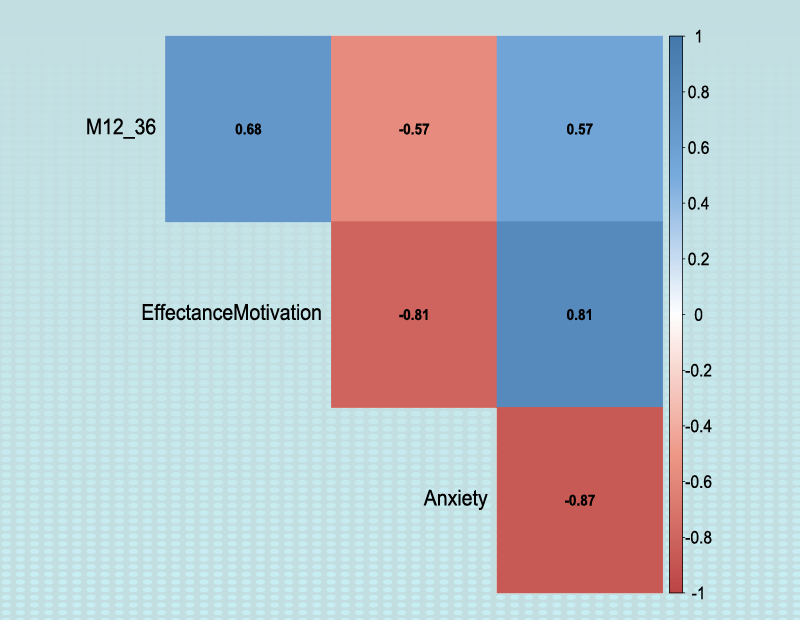

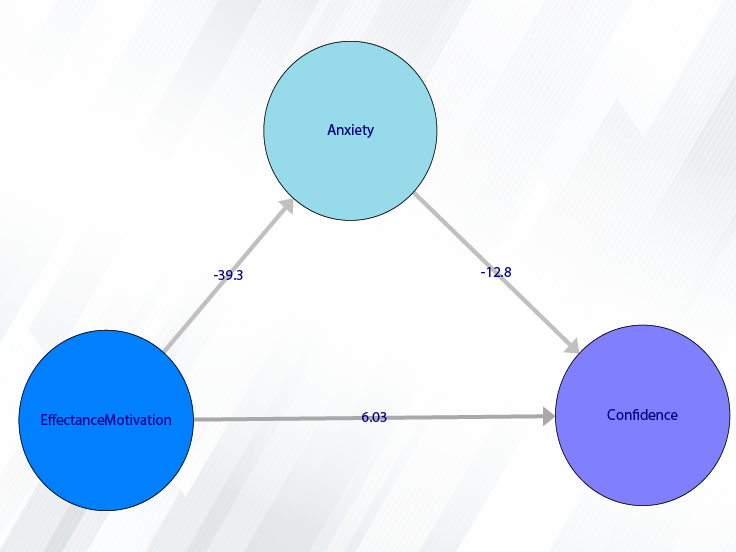

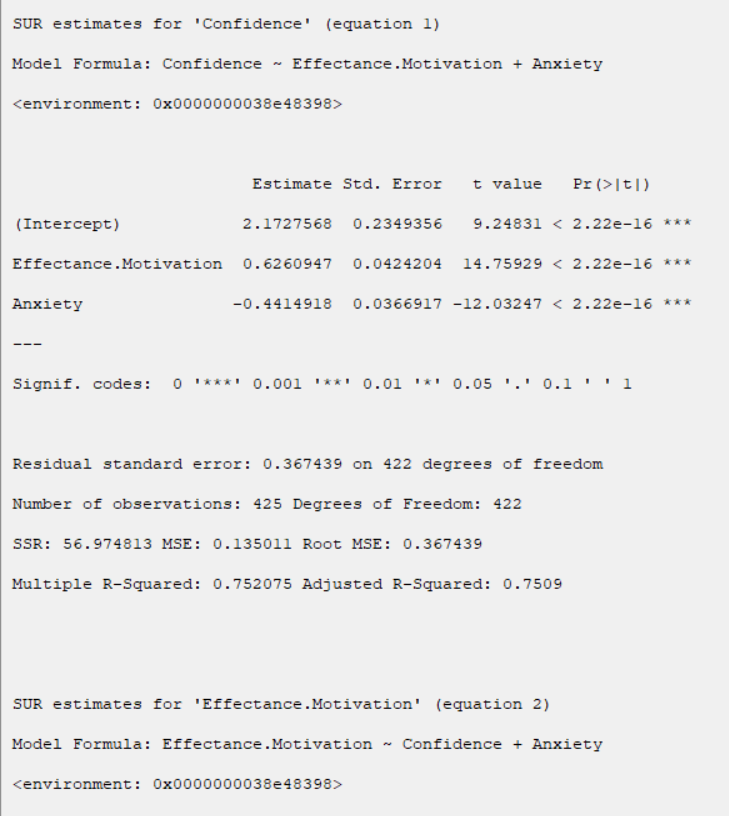

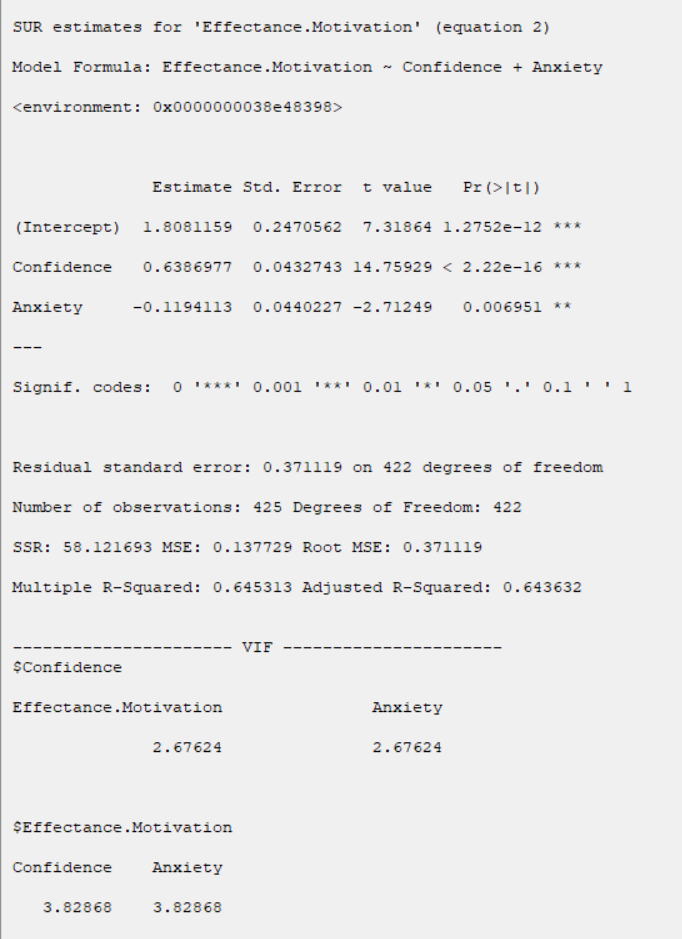

For example, the variables Confidence and Effectance.Motivation are selected in this data.

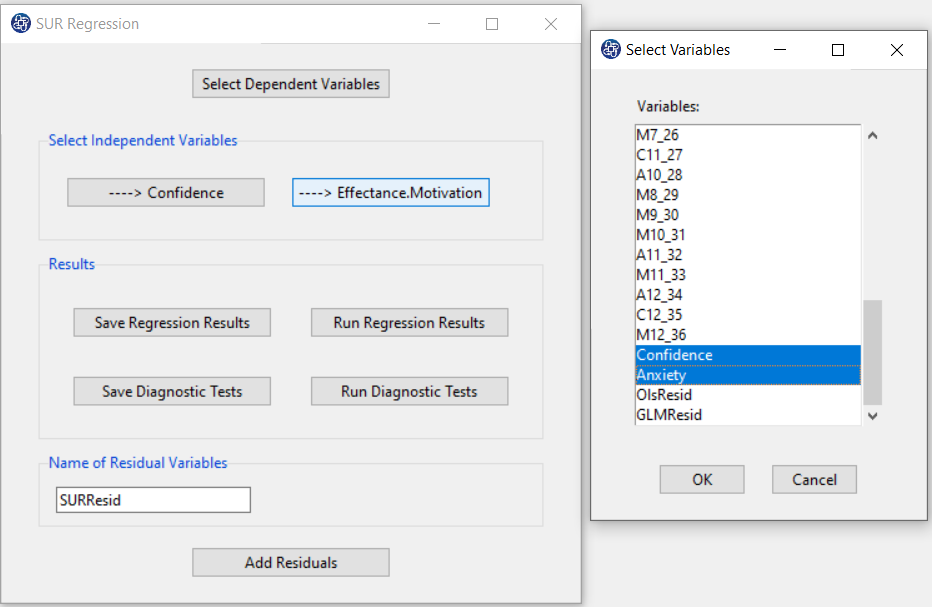

C. Select Independent Variables panel:

In the SUR Regression window, after selecting dependent variables, a button is activated to select independent variables for each dependent variable.

D. Select Independent Variables:

You can select the independent variables through this button. After the window opens, select the desired variables.

For example, for the Confidence variable, the variables Effectance Motivation and Anxiety are selected in this data, and for the Effectance Motivation variable, the variables Confidence and Anxiety are selected.

E. Run Regression:

You can see the results of the SUR regression in the results panel by clicking this button.

Results include the following:

-Model Summary

-Parameter Estimates

-Collinearity Diagnostics

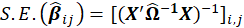

Model Summary:

In the first part, the general indexes of the models are as follows:

Where

is sample size and

is sample size and

is the number of dependent variables (equations).

is the number of dependent variables (equations).

Where

is number of dependent variables (equations) and

is number of dependent variables (equations) and

is the number of independent variables in equation

is the number of independent variables in equation

.

.

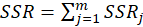

Where

is SSR of equation

is SSR of equation

.

.

detRCov:

This index is $|\hat\Sigma|$, i.e. the determinant of the $\hat\Sigma$ matrix, where $\hat\Sigma$ is the covariance matrix of residuals and $|\cdot|$ is the determinant of a matrix.

OLS-R2:

This index is

$$\text{OLS-}R^2 = \frac{1}{m}\sum_{i=1}^{m} R_i^2,$$

i.e. the mean of the OLS $R_i^2$ values (the coefficients of determination of the models after fitting by the OLS method).

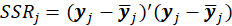

McElroy-R2:

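The goodness of fit of the whole system can be measured by McElroy’s $R^2$ value:

$$R_*^2 = 1 - \frac{\hat u^\top \hat\Omega^{-1} \hat u}{y^\top \left(\hat\Sigma^{-1} \otimes \left(I_T - \frac{\iota\iota^\top}{T}\right)\right) y},$$

where $\hat\Omega = \hat\Sigma \otimes I_T$ and $\iota$ is a column vector of $T$ ones.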
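The system-level indexes can be sketched as follows, taking the responses, residuals, $K_i$ and $\hat\Sigma$ from an SUR fit: $N = mT$, $DF = N - \sum_i K_i$, SSR as the sum of the equation SSRs, detRCov as $|\hat\Sigma|$, and McElroy's $R^2 = 1 - \hat u^\top(\hat\Sigma^{-1}\otimes I_T)\hat u \,/\, y^\top(\hat\Sigma^{-1}\otimes(I_T - \iota\iota^\top/T))y$. `system_summary` is a hypothetical helper.

```python
import numpy as np


def system_summary(ys, resids, K, sigma):
    """System-level indexes from per-equation responses and residuals.

    ys, resids: lists of length-T arrays (one per equation); K: number of
    coefficients per equation; sigma: (m, m) residual covariance matrix.
    """
    m, T = len(ys), len(ys[0])
    N = m * T
    DF = N - sum(K)
    SSR = sum(float(u @ u) for u in resids)
    det_rcov = float(np.linalg.det(sigma))
    # McElroy R^2 = 1 - u' Omega^-1 u / (y' (Sigma^-1 kron centering) y)
    sigma_inv = np.linalg.inv(sigma)
    u = np.concatenate(resids)
    y = np.concatenate(ys)
    centering = np.eye(T) - np.ones((T, T)) / T  # I_T - iota iota' / T
    num = u @ np.kron(sigma_inv, np.eye(T)) @ u
    den = y @ np.kron(sigma_inv, centering) @ y
    return {"N": N, "DF": DF, "SSR": SSR, "detRCov": det_rcov,
            "McElroy-R2": float(1.0 - num / den)}


# With one equation, McElroy's R^2 reduces to the ordinary R^2.
out = system_summary([np.array([1.0, 2.0, 3.0, 4.0])],
                     [np.array([0.1, -0.2, 0.2, -0.1])], [2], np.eye(1))
```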

In the second part, the indexes of each model are presented:

N:

The N value is equal to the sample size, i.e.

$$N_i = T,$$

where $T$ is the sample size.

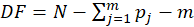

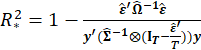

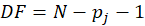

DF:

This index is obtained from the following equation:

$$DF_i = T - K_i,$$

where $K_i$ is the number of independent variables in equation $i$.

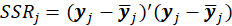

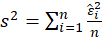

SSR:

This index is obtained from the following equation:

$$SSR_i = \hat u_i^\top \hat u_i = \sum_{t=1}^{T} \hat u_{it}^2$$

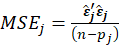

MSE:

This index is obtained from the following equation:

$$MSE_i = \frac{SSR_i}{T - K_i},$$

where $K_i$ is the number of independent variables in equation $i$.

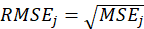

RMSE:

This index is obtained from the following equation:

$$RMSE_i = \sqrt{MSE_i}$$

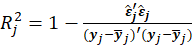

R2:

The goodness of fit of each single equation can be measured by the traditional $R^2$ values:

$$R_i^2 = 1 - \frac{\hat u_i^\top \hat u_i}{(y_i - \bar y_i)^\top (y_i - \bar y_i)},$$

where $R_i^2$ is the $R^2$ value of the $i$th equation and $\bar y_i$ is the mean value of $y_i$.

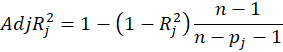

Adj R2:

This index is obtained from the following equation:

$$\bar R_i^2 = 1 - (1 - R_i^2)\,\frac{T - 1}{T - K_i}$$
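The per-equation indexes can be computed from the response $y_i$, the residuals $\hat u_i$ and $K_i$ with $DF_i = T - K_i$, $MSE_i = SSR_i / DF_i$, $RMSE_i = \sqrt{MSE_i}$, $R_i^2 = 1 - SSR_i / \sum_t (y_{it} - \bar y_i)^2$ and $\bar R_i^2 = 1 - (1 - R_i^2)(T - 1)/(T - K_i)$. A plain-Python sketch; `equation_summary` is a hypothetical name.

```python
import math


def equation_summary(y, resid, K):
    """Per-equation indexes: N, DF, SSR, MSE, RMSE, R2 and Adj R2."""
    T = len(y)
    df = T - K
    ssr = sum(u * u for u in resid)
    y_bar = sum(y) / T
    sst = sum((v - y_bar) ** 2 for v in y)  # total sum of squares
    mse = ssr / df
    r2 = 1.0 - ssr / sst
    adj_r2 = 1.0 - (1.0 - r2) * (T - 1) / df
    return {"N": T, "DF": df, "SSR": ssr, "MSE": mse,
            "RMSE": math.sqrt(mse), "R2": r2, "Adj R2": adj_r2}


stats = equation_summary([1.0, 2.0, 3.0, 4.0], [0.1, -0.2, 0.2, -0.1], K=2)
```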

The covariance matrix of the residuals used for estimation:

Since the (true) disturbances (

) of the estimated equations are generally unknown, their covariance matrix cannot be determined.

Therefore, this covariance matrix is generally calculated from estimated residuals (

) of the estimated equations are generally unknown, their covariance matrix cannot be determined.

Therefore, this covariance matrix is generally calculated from estimated residuals (

) that are obtained from a first-step OLS estimation.

) that are obtained from a first-step OLS estimation.

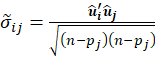

The residual covariance matrix is calculated

Where

and

and

are the number of independent variables in equation

are the number of independent variables in equation

and

and

, respectively.

, respectively.

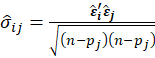

The covariance matrix of the residuals:

The residual covariance matrix is calculated as

$$\hat\sigma_{ij} = \frac{\hat u_i^\top \hat u_j}{\sqrt{(T - K_i)(T - K_j)}},$$

where $K_i$ and $K_j$ are the number of independent variables in equation $i$ and $j$, respectively.

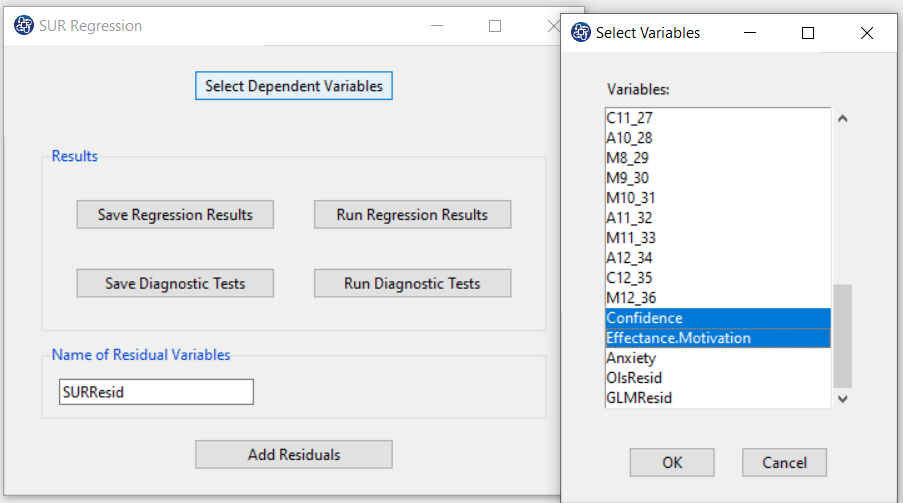

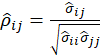

The correlations of the residuals:

The components of this matrix are defined as follows:

$$r_{ij} = \frac{\hat\sigma_{ij}}{\sqrt{\hat\sigma_{ii}\,\hat\sigma_{jj}}}$$
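Each correlation is $r_{ij} = \hat\sigma_{ij} / \sqrt{\hat\sigma_{ii}\hat\sigma_{jj}}$. A small sketch that converts a residual covariance matrix given as nested lists (`resid_correlation` is a hypothetical name):

```python
import math


def resid_correlation(sigma):
    """Convert a residual covariance matrix (list of lists) to correlations."""
    m = len(sigma)
    return [[sigma[i][j] / math.sqrt(sigma[i][i] * sigma[j][j]) for j in range(m)]
            for i in range(m)]


corr = resid_correlation([[4.0, 1.2], [1.2, 1.0]])
# corr[0][1] is about 0.6, i.e. 1.2 / sqrt(4 * 1)
```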

-Parameter Estimates

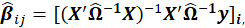

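For each equation, the coefficients presented in this table are obtained from the following relationships:

*Estimate:

$$\hat\beta = (X^\top \hat\Omega^{-1} X)^{-1} X^\top \hat\Omega^{-1} y, \qquad \hat\Omega = \hat\Sigma \otimes I_T$$

*Std. Error:

$$SE(\hat\beta_k) = \sqrt{\left[(X^\top \hat\Omega^{-1} X)^{-1}\right]_{kk}}$$

*t value:

$$t = \frac{\hat\beta_k}{SE(\hat\beta_k)}$$

*Pr(>|t|):

$$\text{P-value} = \Pr\left(|t_{n-p-1}| > |t|\right)$$

where $t_{n-p-1}$ denotes a random variable following the t distribution with $n - p - 1$ degrees of freedom.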
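Given the SUR estimates and their covariance matrix, the Std. Error and t value columns follow directly. This NumPy sketch (hypothetical `coef_table`) stops at the t values; the two-sided P-value additionally needs the t distribution, e.g. `2 * scipy.stats.t.sf(abs(t), df)` if SciPy is available, with the degrees of freedom described above.

```python
import numpy as np


def coef_table(beta, cov_beta):
    """Std. errors (sqrt of the covariance diagonal) and t values."""
    beta = np.asarray(beta, dtype=float)
    se = np.sqrt(np.diag(cov_beta))
    t = beta / se
    return se, t


se, t = coef_table([2.0, -0.5], np.array([[0.25, 0.01], [0.01, 0.04]]))
```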

*Collinearity Diagnostics:

In the Collinearity Diagnostics table, multicollinearity diagnostics for the independent variables of each regression equation are given.

For each independent variable, the VIF index is calculated in two steps:

STEP1:

First, run an ordinary least squares regression with $x_i$ as a function of all the other explanatory variables of the same regression equation:

$$x_i = \alpha_0 + \sum_{j \neq i} \alpha_j x_j + e.$$

STEP2:

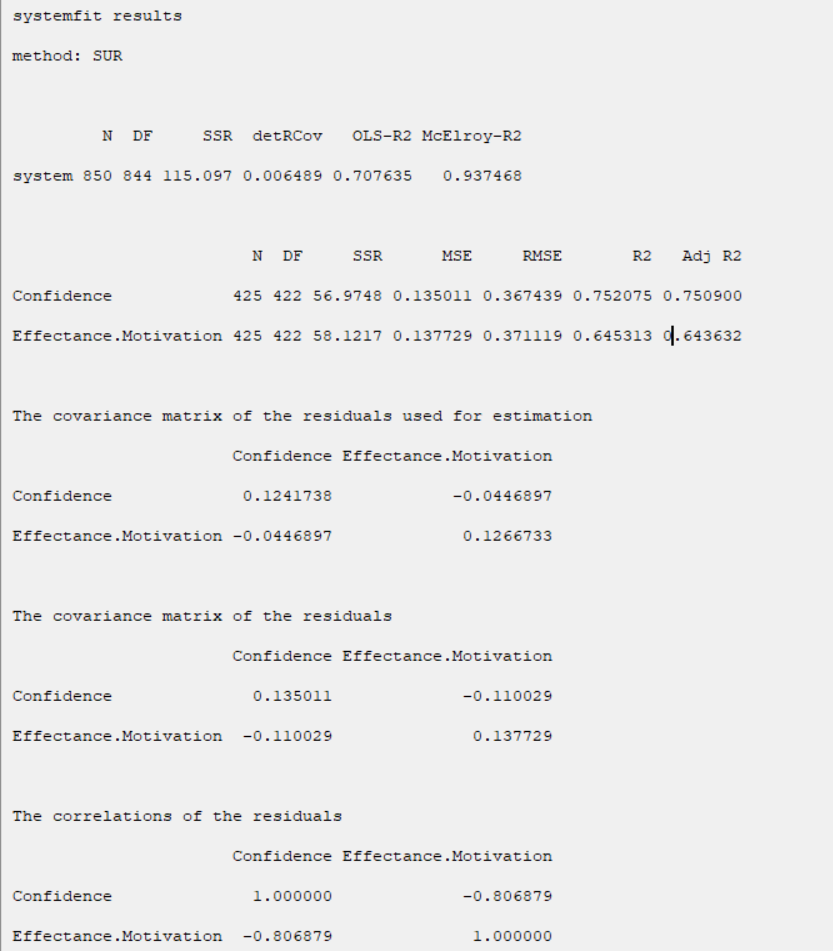

Then, calculate the VIF with the following equation:

$$VIF_i = \frac{1}{1 - R_i^2},$$

where $R_i^2$ is the coefficient of determination of the regression equation in step one.

A rule of decision making is that if $VIF_i > 10$, then multicollinearity is high (a cutoff of 5 is also commonly used).
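The two VIF steps can be sketched with NumPy as follows: each regressor is regressed on the others plus an intercept, and $VIF_i = 1/(1 - R_i^2)$. The input is assumed to hold only the independent variables of one equation (no intercept column); `vif` is a hypothetical helper.

```python
import numpy as np


def vif(X):
    """VIF for each column of X (regressors only, no intercept column)."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = []
    for i in range(p):
        # Step 1: regress x_i on the other regressors plus an intercept.
        others = np.column_stack([np.ones(n), np.delete(X, i, axis=1)])
        coef, *_ = np.linalg.lstsq(others, X[:, i], rcond=None)
        resid = X[:, i] - others @ coef
        r2 = 1.0 - resid @ resid / np.sum((X[:, i] - X[:, i].mean()) ** 2)
        # Step 2: VIF_i = 1 / (1 - R_i^2).
        out.append(1.0 / (1.0 - r2))
    return np.array(out)


orthogonal = np.column_stack([[1, -1, 1, -1], [1, 1, -1, -1]])
print(vif(orthogonal))  # approximately 1 for both: the columns are uncorrelated
near_dup = np.column_stack([[1, 2, 3, 4, 5], [1.1, 1.9, 3.05, 4.0, 4.95]])
print(vif(near_dup))  # both far above the cutoff of 10
```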

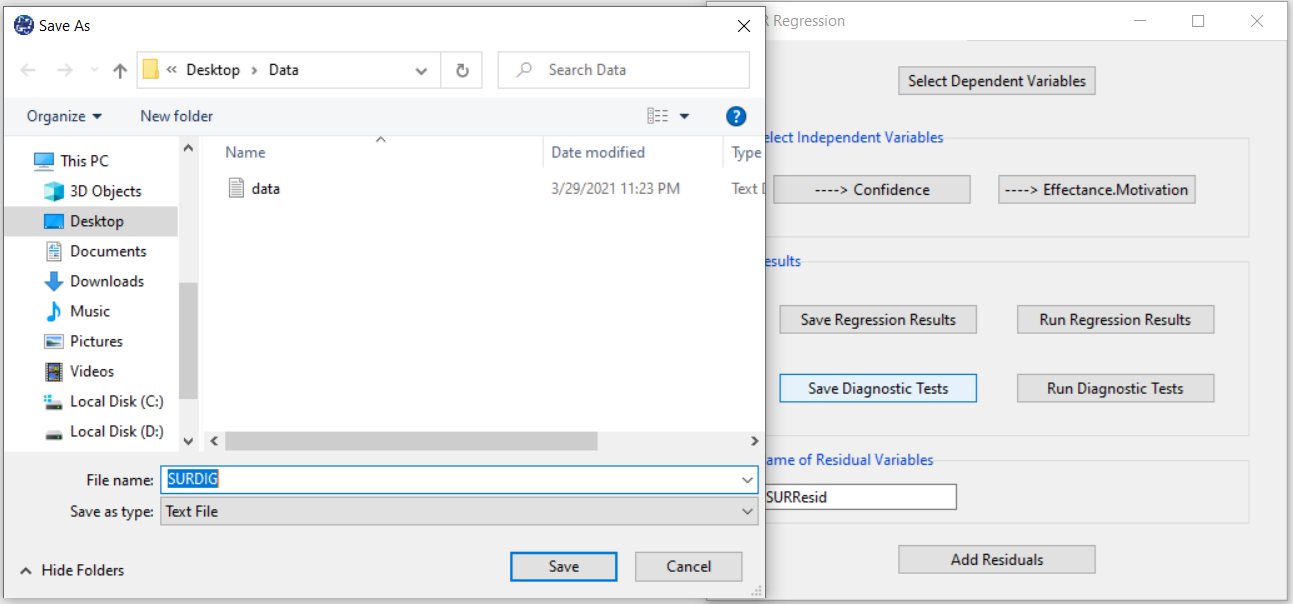

F. Save Regression:

By clicking this button, you can save the regression results. After opening the save results window, you can save the results in “text” or “Microsoft Word” format.

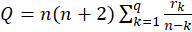

G. Run Diagnostic Tests:

In SUR regression, we make the following assumptions for each equation:

1-The residuals are independent of each other.

2-The residuals have a common variance.

3-It is sometimes additionally assumed that the errors are normally distributed.

If the residuals are normally distributed, a test for serial correlation can replace the independence test, because uncorrelated jointly normal variables are independent.

The following tests are provided to test these assumptions:

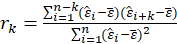

*Test for Serial Correlation:

-Box Ljung:

The test statistic is:

$$Q = n(n+2)\sum_{k=1}^{q}\frac{\hat\rho_k^2}{n-k}$$

where $n$ is the sample size, $\hat\rho_k$ is the sample autocorrelation at lag $k$, and $q$ is the number of lags being tested. Under $H_0$ (no serial correlation), $Q \sim \chi^2_q$.

In this software, we give the results for q=1.
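A plain-Python sketch of the lag-1 Box-Ljung test, $Q = n(n+2)\hat\rho_1^2/(n-1)$, with the $\chi^2_1$ p-value computed as $\operatorname{erfc}(\sqrt{Q/2})$. It uses the usual mean-centred sample autocorrelation; `box_ljung_q1` is a hypothetical name.

```python
import math


def box_ljung_q1(x):
    """Ljung-Box Q statistic and p-value for q = 1 lag."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    # Sample autocorrelation at lag 1.
    rho1 = sum(dev[t] * dev[t - 1] for t in range(1, n)) / sum(d * d for d in dev)
    Q = n * (n + 2) * rho1 ** 2 / (n - 1)
    # Under H0, Q ~ chi^2 with 1 df, and P(chi2_1 > Q) = erfc(sqrt(Q / 2)).
    p_value = math.erfc(math.sqrt(Q / 2))
    return Q, p_value


Q, p = box_ljung_q1([1, -1, 1, -1, 1, -1, 1, -1])  # strongly alternating series
```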

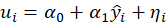

*Test for Heteroscedasticity:

-Breusch Pagan:

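The test statistic is calculated in two steps:

STEP1:

Estimate the auxiliary regression of the squared residuals on the independent variables:

$$\hat u_t^2 = \gamma_0 + \gamma_1 x_{1t} + \dots + \gamma_p x_{pt} + v_t$$

where $\hat u_t$ is the residual of observation $t$ and $x_{1t}, \dots, x_{pt}$ are the independent variables of the equation.

STEP2:

Calculate the $LM$ test statistic:

$$LM = nR^2$$

where $R^2$ is the coefficient of determination of the step-one regression. Under $H_0$ (homoscedasticity), $LM \sim \chi^2_p$.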
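A NumPy sketch of the two Breusch-Pagan steps, assuming the studentized form $LM = nR^2$ obtained by regressing the squared residuals on an intercept and the equation's $p$ independent variables. `breusch_pagan` is a hypothetical helper; it returns $LM$ and its degrees of freedom $p$, to be compared with the $\chi^2_p$ distribution.

```python
import math

import numpy as np


def breusch_pagan(resid, X):
    """Studentized Breusch-Pagan LM = n R^2 and its degrees of freedom.

    resid: residuals of one equation; X: (n, p) regressors without intercept.
    """
    u2 = np.asarray(resid, dtype=float) ** 2
    X = np.asarray(X, dtype=float).reshape(len(u2), -1)
    n, p = X.shape
    Z = np.column_stack([np.ones(n), X])
    # Step 1: auxiliary regression of u^2 on an intercept and the regressors.
    g, *_ = np.linalg.lstsq(Z, u2, rcond=None)
    e = u2 - Z @ g
    r2 = 1.0 - e @ e / np.sum((u2 - u2.mean()) ** 2)
    # Step 2: LM = n R^2, approximately chi^2_p under homoscedasticity.
    return n * r2, p


x = [1, 2, 3, 4]
lm, df = breusch_pagan([math.sqrt(v) for v in x], x)  # u^2 exactly linear in x
```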

*Normality Tests:

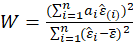

-Shapiro Wilk:

The Shapiro-Wilk test uses the test statistic

$$W = \frac{\left(\sum_{i=1}^{n} a_i x_{(i)}\right)^2}{\sum_{i=1}^{n} (x_i - \bar x)^2}$$

where $x_{(i)}$ is the $i$th order statistic (the $i$th-smallest value in the sample), $\bar x$ is the sample mean, and the $a_i$ values are given by

$$(a_1, \dots, a_n) = \frac{m^\top V^{-1}}{C}, \qquad C = \lVert V^{-1} m \rVert = \left(m^\top V^{-1} V^{-1} m\right)^{1/2}.$$

The vector $m = (m_1, \dots, m_n)^\top$ is made of the expected values of the order statistics of independent and identically distributed random variables sampled from the standard normal distribution; finally, $V$ is the covariance matrix of those normal order statistics. $W$ is compared against tabulated values of this statistic's distribution.

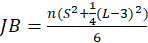

-Jarque Bera:

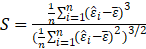

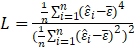

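The test statistic is defined as

$$JB = \frac{n}{6}\left(S^2 + \frac{(K-3)^2}{4}\right)$$

where $n$ is the sample size and

$$S = \frac{\frac{1}{n}\sum_{i=1}^{n}(x_i - \bar x)^3}{\left(\frac{1}{n}\sum_{i=1}^{n}(x_i - \bar x)^2\right)^{3/2}}, \qquad K = \frac{\frac{1}{n}\sum_{i=1}^{n}(x_i - \bar x)^4}{\left(\frac{1}{n}\sum_{i=1}^{n}(x_i - \bar x)^2\right)^{2}}$$

are the sample skewness and kurtosis. Under $H_0$ (normality), $JB \sim \chi^2_2$ asymptotically.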
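A Jarque-Bera sketch in plain Python, using the moment-based skewness $S$ and kurtosis $K$ and $JB = \frac{n}{6}\left(S^2 + (K-3)^2/4\right)$. Since the reference distribution is $\chi^2_2$, whose survival function is $e^{-x/2}$, the asymptotic p-value needs no special functions. `jarque_bera` is a hypothetical name.

```python
import math


def jarque_bera(x):
    """JB statistic and asymptotic chi^2_2 p-value."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    S = m3 / m2 ** 1.5  # sample skewness
    K = m4 / m2 ** 2    # sample kurtosis
    jb = n / 6 * (S ** 2 + (K - 3) ** 2 / 4)
    # P(chi2_2 > jb) = exp(-jb / 2).
    return jb, math.exp(-jb / 2)


jb, p = jarque_bera([-2, -1, 0, 1, 2])  # symmetric, so S = 0
```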

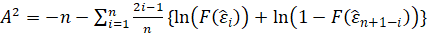

-Anderson Darling:

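The test statistic is given by:

$$A^2 = -n - \frac{1}{n}\sum_{i=1}^{n}(2i-1)\left[\ln F\left(x_{(i)}\right) + \ln\left(1 - F\left(x_{(n+1-i)}\right)\right)\right]$$

where $x_{(i)}$ is the $i$th order statistic and $F(\cdot)$ is the cumulative distribution function of the normal distribution. The test statistic can then be compared against the critical values of the theoretical distribution.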
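A sketch of $A^2 = -n - \frac{1}{n}\sum_{i=1}^{n}(2i-1)\left[\ln F(x_{(i)}) + \ln(1 - F(x_{(n+1-i)}))\right]$ using Python's `statistics.NormalDist`, assuming $F$ is the normal CDF with mean and standard deviation estimated from the sample (as in a composite normality test). Critical values and p-values are not computed; `anderson_darling` is a hypothetical name.

```python
import math
from statistics import NormalDist, mean, stdev


def anderson_darling(x):
    """A^2 for normality, with mean and sd estimated from the sample."""
    xs = sorted(x)
    n = len(xs)
    F = NormalDist(mean(xs), stdev(xs)).cdf
    # x_(i) is xs[i - 1] and x_(n + 1 - i) is xs[n - i] (0-based lists).
    s = sum((2 * i - 1) * (math.log(F(xs[i - 1])) + math.log(1 - F(xs[n - i])))
            for i in range(1, n + 1))
    return -n - s / n


sample = [-1.2, -0.4, 0.1, 0.3, 0.9, 1.6, -0.7, 0.2]
a2 = anderson_darling(sample)
```

Because the sample is standardized with its own mean and standard deviation, $A^2$ does not change under a shift or rescaling of the data.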

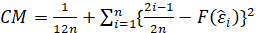

-Cramer Von Mises:

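The test statistic is given by:

$$W^2 = \frac{1}{12n} + \sum_{i=1}^{n}\left[\frac{2i-1}{2n} - F\left(x_{(i)}\right)\right]^2$$

where $x_{(i)}$ is the $i$th order statistic and $F(\cdot)$ is the cumulative distribution function of the normal distribution. The test statistic can then be compared against the critical values of the theoretical distribution.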
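A sketch of $W^2 = \frac{1}{12n} + \sum_{i=1}^{n}\left[\frac{2i-1}{2n} - F(x_{(i)})\right]^2$, assuming $F$ is the normal CDF with the sample mean and standard deviation; only the statistic is computed (`cramer_von_mises` is a hypothetical name).

```python
from statistics import NormalDist, mean, stdev


def cramer_von_mises(x):
    """W^2 for normality, with mean and sd estimated from the sample."""
    xs = sorted(x)
    n = len(xs)
    F = NormalDist(mean(xs), stdev(xs)).cdf
    # Squared gaps between the plotting positions (2i-1)/(2n) and F(x_(i)).
    return 1 / (12 * n) + sum(((2 * i - 1) / (2 * n) - F(xs[i - 1])) ** 2
                              for i in range(1, n + 1))


sample = [-1.2, -0.4, 0.1, 0.3, 0.9, 1.6, -0.7, 0.2]
w2 = cramer_von_mises(sample)
```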

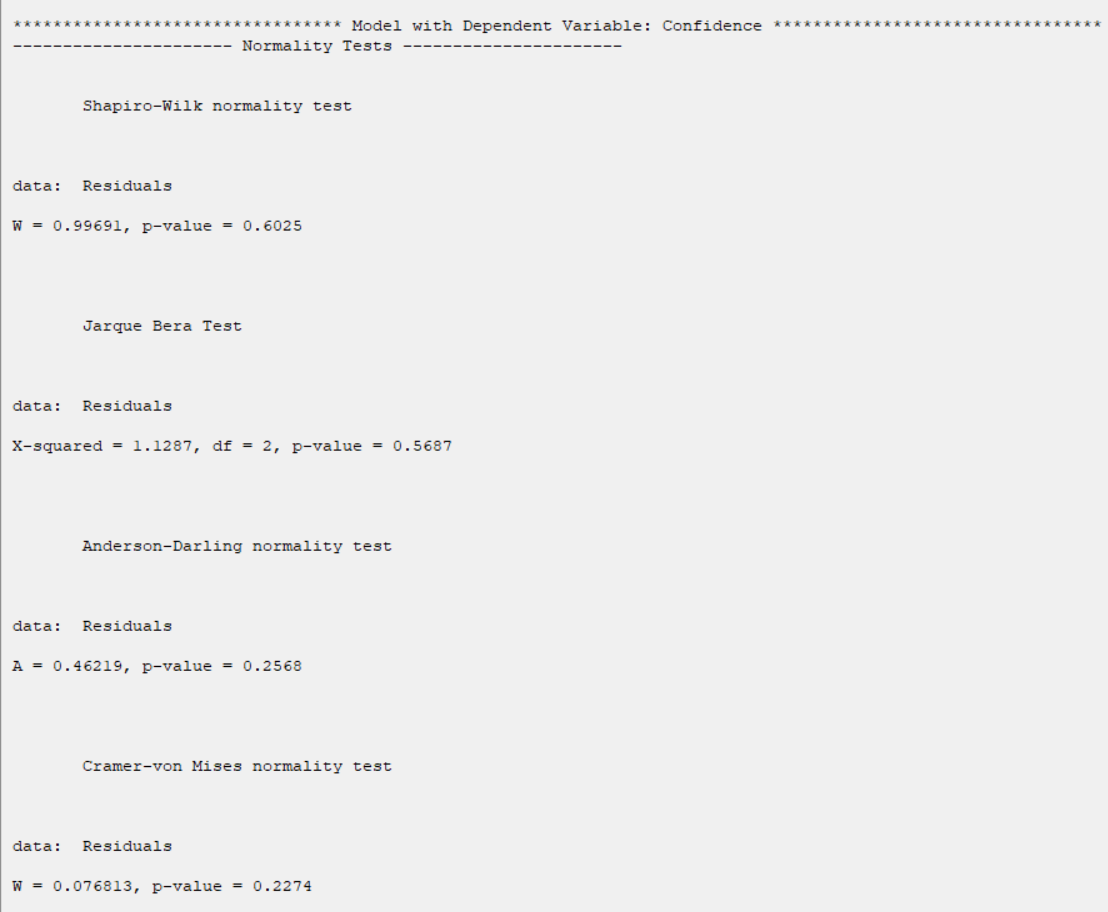

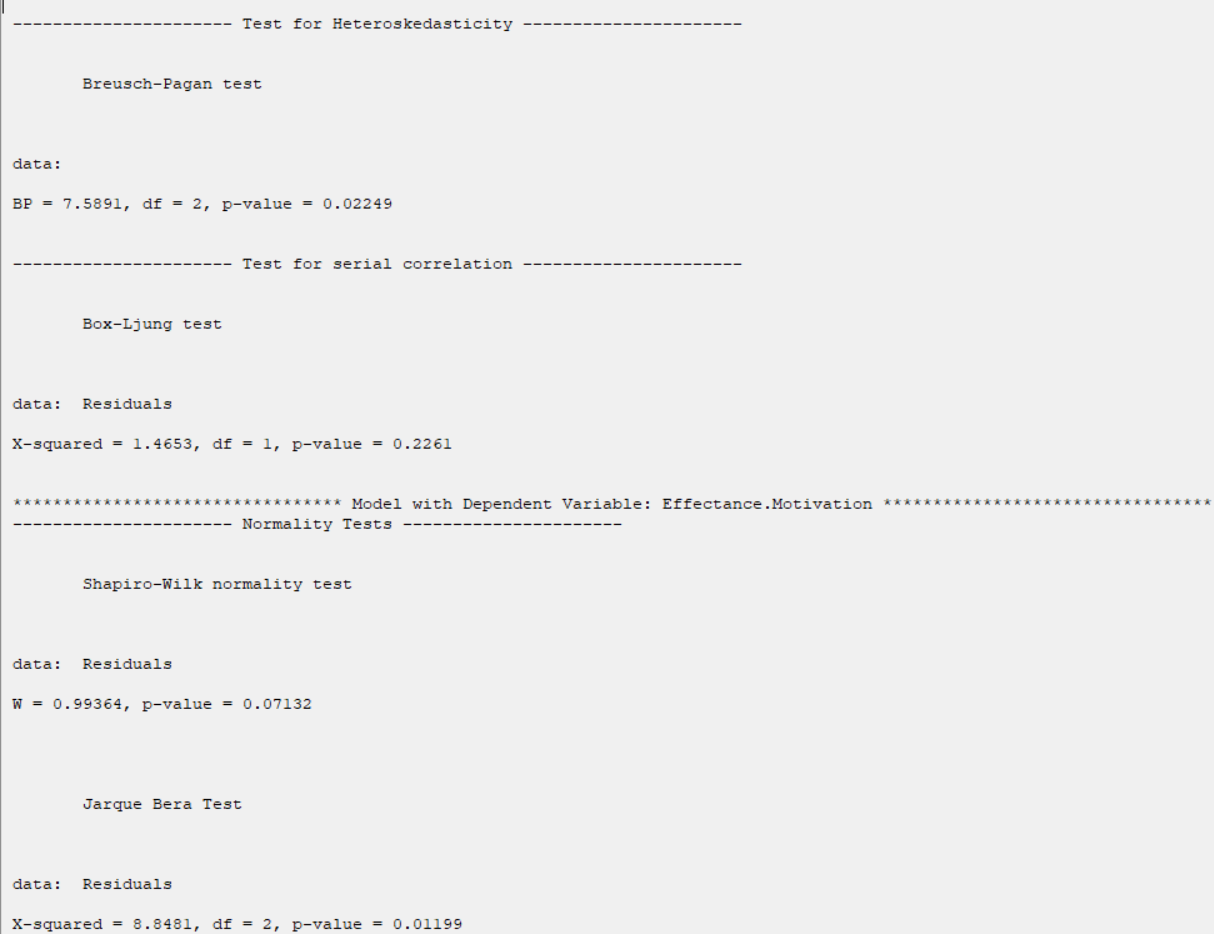

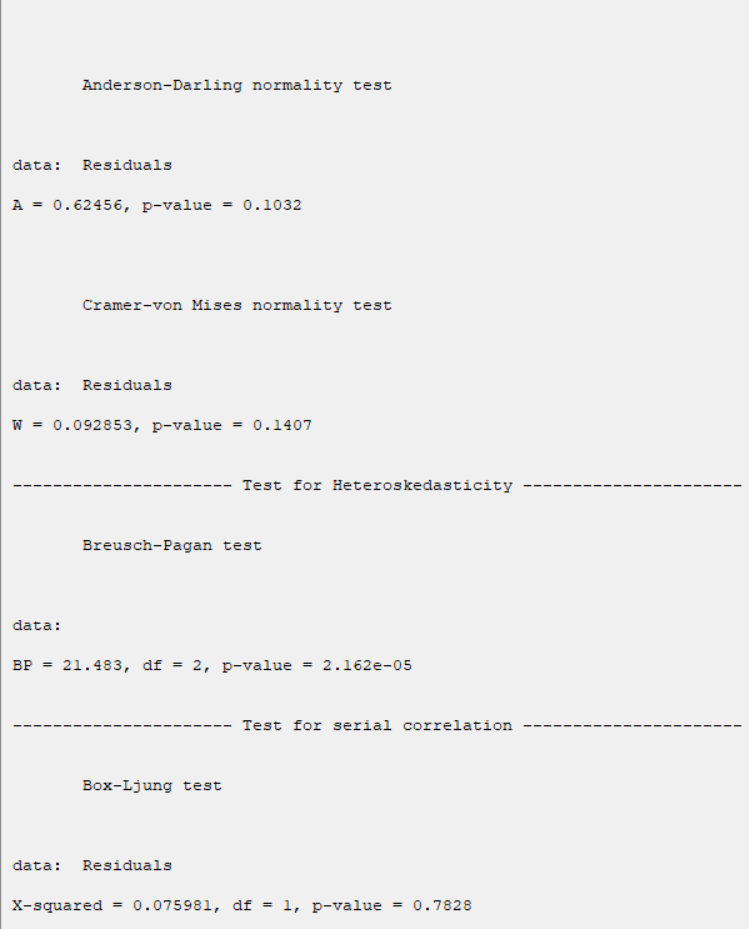

G.1. Result of Diagnostic Tests:

The test results for each model are broken down as follows:

*****Model with Dependent Variable "model dependent variable name"**********

The results show that in all tests the P-value is greater than 0.05, except for the heteroskedasticity test of the first model. Therefore, at the 0.05 significance level (0.95 confidence level), the hypotheses of normality, homogeneity of variance, and independence of the regression model residuals are not rejected, except for homogeneity of variance in the first model.

H. Save Diagnostic Tests:

By clicking this button, you can save the results of the diagnostic tests. After opening the save results window, you can save the results in “text” or “Microsoft Word” format.

I. Add Residuals & Name of Residual Variable:

By clicking this button, you can save the residuals of the regression equations under the desired name (Name of Residual Variable). The default name for the residuals is “SURResid”.

This message will appear if the residuals are saved successfully:

“Name of Residual Variable Residuals added in Data Table.”

For example:

“SURResid Residuals added in Data Table.”